By Gilad Shainer, Mellanox Technologies

The ever-growing demand for higher performance in supercomputing requires that interconnect solutions provide ever-faster speeds, extremely low latency, and a continuous stream of new smart offloading and acceleration engines. In a parallel computing environment, the interconnect is the computer and the heart of the datacenter. Proprietary networks cannot keep meeting these needs over time, which limits a proprietary network to a lifespan of three to five years at most. In the past, it was possible to extend the lifetime of a network. Today, however, the exponential growth of the data to be analyzed, together with increasing simulation complexity and the integration of artificial intelligence and deep learning into High Performance Computing (HPC), has been shrinking the effective lifetime of networks, and that lifetime is expected to continue to decline.

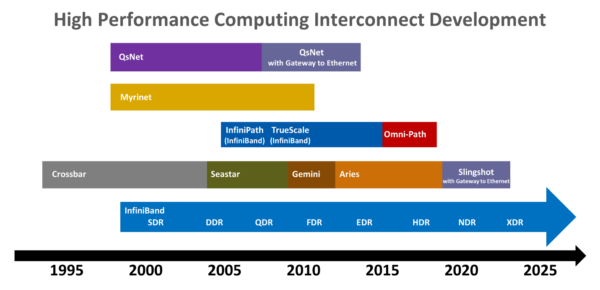

Figure 1 – High Performance Computing Interconnect Development

We can categorize networking technologies into two groups: proprietary technologies (such as Aries, Slingshot, and Omni-Path) and standards-based technologies (such as InfiniBand and RoCE). Creating a new proprietary protocol raises several challenges. One is the need to re-create the required software ecosystem, including software drivers, operating system support, communication libraries, and support from application vendors or open source groups. This is a long and very expensive process, and if it must be repeated every three years, it becomes a huge burden for HPC end-users. Another challenge is that the basic networking structure and capabilities must be re-invented repeatedly, which places an unnecessary burden not only on the companies doing so, but on their funding agencies as well.

With standards-based interconnects, these problems do not exist. In the case of InfiniBand, each capability introduced in one generation is carried into future generations, making each generation backward and forward compatible. The Quality of Service (QoS) capability, an inherent part of the InfiniBand specification, has existed since the first generation and has been leveraged and optimized from one speed generation to the next. Software drivers, communication frameworks, native inbox support within the various operating systems, and application optimizations and tools all continue to utilize the hardware support over time, and therefore deliver the highest return on investment for their developers and users.

It is no surprise that basic network elements, such as QoS or Congestion Control, are promoted as the highlights of new proprietary interconnect technologies, because they are re-invented with each new iteration. The “new” benchmarks created for these basic elements effectively demonstrate the superfluous effort spent on re-creating network structures.

These network elements are already an integral part of enduring standard technologies. Efforts invested in these areas are therefore not wasted; rather, they enable innovation that improves performance, scalability and robustness. One area of such innovation is the development of smart In-Network Computing engines, which build upon the existing standard to provide higher resiliency for supercomputers. For example, the Mellanox Scalable Hierarchical Aggregation and Reduction Protocol (SHARP)™ technology improves the performance of MPI and deep learning operations by offloading collective operations and data reduction algorithms from the CPU or GPU to the switch network, which eliminates the need to send data multiple times between endpoints. Another example is the Mellanox SHIELD technology, which adds self-healing capabilities to the network.
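To make the idea of in-network reduction concrete, here is a toy Python model that counts how many times each endpoint has to send its data in a sum reduction across all hosts. It is only a sketch and does not represent the actual SHARP protocol or any Mellanox API: the function names, the switch radix, and the choice of recursive doubling as the host-based baseline are all assumptions made for illustration.

```python
from typing import List, Tuple


def host_based_allreduce(values: List[float]) -> Tuple[List[float], int]:
    """Host-based baseline (assumed: recursive doubling).

    In every round each host exchanges its partial sum with one partner,
    so each host sends its data log2(N) times.
    """
    n = len(values)
    if n == 0 or n & (n - 1) != 0:
        raise ValueError("toy model assumes a power-of-two host count")
    partial = list(values)
    sends_per_host = 0
    distance = 1
    while distance < n:
        # Host i combines its partial sum with that of host (i XOR distance).
        partial = [partial[i] + partial[i ^ distance] for i in range(n)]
        sends_per_host += 1
        distance *= 2
    return partial, sends_per_host


def in_network_allreduce(values: List[float], radix: int) -> Tuple[List[float], int, int]:
    """Hypothetical in-network model: switches form an aggregation tree.

    Each host sends its data once to its leaf switch; every switch sums
    up to `radix` inputs and forwards a single result toward the root,
    which then distributes the final value back to all hosts.
    """
    if not values:
        raise ValueError("need at least one host")
    if radix < 2:
        raise ValueError("radix must be at least 2")
    level = list(values)
    switch_levels = 0
    while len(level) > 1:
        # One tree level: each switch reduces a group of `radix` inputs.
        level = [sum(level[i:i + radix]) for i in range(0, len(level), radix)]
        switch_levels += 1
    sends_per_host = 1  # each host injects its data into the network once
    return [level[0]] * len(values), sends_per_host, switch_levels


if __name__ == "__main__":
    data = [float(i) for i in range(1, 9)]  # 8 hosts, one value each
    print(host_based_allreduce(data))
    print(in_network_allreduce(data, radix=4))
```

In this model, eight hosts using the host-based baseline each send their data three times, while with the switch tree each host sends once and the reduction happens in two switch levels. Real networks differ in many ways (message sizes, pipelining, topology), so the counts indicate the trend rather than measured performance.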

The early generations of InfiniBand brought support for full network-transport offload and RDMA capabilities, which enable faster data movement, lower latency and a dramatic reduction in the CPU utilization spent on networking operations (which translates into more CPU cycles that can be dedicated to the actual application runtime). Later, RDMA capabilities were extended to support GPUs as well, enabling both a ten-fold reduction in latency and a ten-fold increase in bandwidth.

Standards-based technologies like InfiniBand and RoCE not only provide world-leading performance and scalability, but also protect past investments and ensure forward compatibility, leading to the best return on investment. For more information on the capabilities and advantages of InfiniBand and RoCE, visit our About InfiniBand page.